TL;DR Version: Are conscious A.I.s possible, and if so, what will that mean, legally speaking?

Okay, I was having trouble focusing in class today, so I accidentally thought of a few serious debate-style questions that I'd love to hear input on. Here's the first one! (It's a bit out there, but it's interesting to think about, so try to take it a bit seriously, even if it sounds funny.)

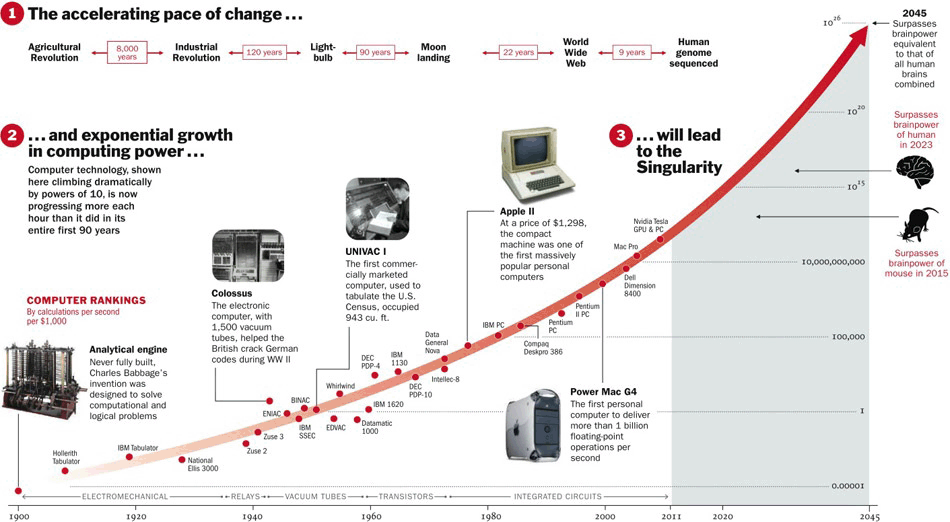

Are you familiar with Moore's Law? If not, here's an image to explain. Sorry it's kinda small, I don't know how to fix it…:

Anyway, apparently, in around 10 years, we could have computers that are as "smart" as humans.

So what happens if that's true? 30 years down the line, would that mean we could have self-aware computers? Would that mean that they could form identities, thoughts, and desires? Would that make them "people" in a legal sense, and would they deserve rights?

Here are some example questions: Would they be able to vote? Could they get married (and only to other A.I.s, or to humans too)? Would they be able to adopt human children? If two A.I.s created a new A.I. that developed just like a child, would they legally be considered to have given "birth" to said "child"? And what about workplace protections? Would we need to put some in place for the "inferior" humans, some for the "soulless robots," or both? What about workplace and safety protections for beings that don't have to feel pain? Would they be able to join the army, given that they could possibly be hacked? Should they be able to "back themselves up" onto a cloud or something so they would be more or less immortal?